Introduction

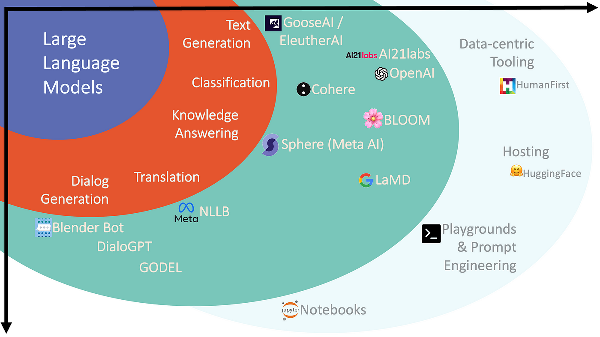

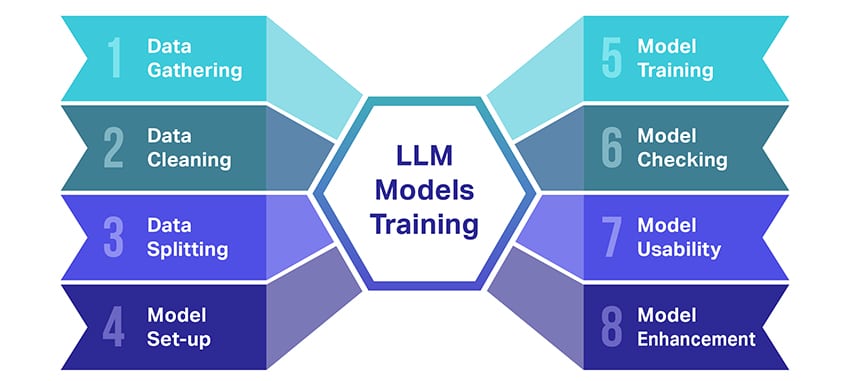

In recent years, large-scale models trained on massive datasets have made significant strides in fields such as natural language processing and computer vision. These models can handle complex tasks and perform exceptionally well on many benchmarks. This article highlights some of the latest advancements in large model research.

1. Improvements in Transformer Models

Since its introduction in 2017, the Transformer architecture has undergone numerous improvements and optimizations, enhancing its performance across various tasks. Notable models like BERT, the GPT series, and T5 have achieved remarkable results in natural language processing tasks.

2. Development of Multimodal Models

Multimodal models can process multiple types of data, such as text, images, and audio, within a single model. Prominent examples include CLIP, which learns a shared embedding space for images and text and performs well on tasks such as zero-shot image classification, and DALL-E, which generates images from text descriptions.

3. Model Compression and Acceleration

While large models are powerful, they also come with high computational and storage costs. To address this issue, researchers have developed various model compression and acceleration techniques, such as knowledge distillation, pruning, and quantization, enabling the deployment of large models in resource-constrained environments.
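To make one of these techniques concrete, here is a minimal sketch of quantization, assuming the simplest variant: symmetric, per-tensor rounding of floating-point weights to 8-bit integers. The function names `quantize_int8` and `dequantize` and the sample weights are hypothetical, chosen for illustration only; production toolkits typically use more elaborate schemes (per-channel scales, calibration data, quantization-aware training).

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization of floats to the int8 range [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Guard against an empty or all-zero tensor, where any scale would do.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map the stored integers back to approximate float weights."""
    return [v * scale for v in q]


# Hypothetical weights, for illustration only.
weights = [0.5, -1.27, 0.0, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The worst-case rounding error is half of one quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight is stored in one byte instead of four (for 32-bit floats), plus a single shared scale, at the cost of a rounding error of at most half a quantization step per weight.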